This is Part 3 of a series. If you have not read Parts 1 or 2, you can do so here:

ReFS vs NTFS – Introduction (Part 1)

ReFS vs NTFS – Initial Analysis (Part 2)

4K blocks aside then, what else have we learnt?

If we look at the 64K repositories over the 8 weeks of testing, we can make a direct comparison between the various compression settings that make sense to deploy in the real world:

Why does the Lego man at the beginning of the NTFS graphs transform into a somewhat offensive gesture?

Initially, Windows deduplication was set to deduplicate files older than 5 days; I changed it to 1 day (where the 3rd Lego man appears chopped in half). That aside, it is clear at this point that using uncompressed backups to avail of the benefits of deduplication requires additional capacity overhead.

Realistically, to see any benefit you would need 2.5 to 3 times the amount of used capacity on your platform to be able to ingest data. Bear in mind we also need to allow for unexpected increases in data: a file server migration, a DB cluster rebuild, or an Exchange DAG rebuild or upgrade all create relatively huge amounts of changed data “over the norm”. However, such a repository would yield huge retention capabilities.
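As a rough illustration of the 2.5 to 3 times rule of thumb, the arithmetic can be sketched in Python. The function name, the `burst_fraction` parameter and the example figures are assumptions made purely for illustration; they are not measurements from the test platform.

```python
def ingest_capacity_range_tb(used_tb, burst_fraction=0.0):
    """Estimate a (low, high) repository capacity range in TB.

    Applies the 2.5x to 3x rule of thumb to the used capacity, then adds an
    optional allowance for unexpected bursts of changed data.
    burst_fraction is a hypothetical knob, e.g. 0.2 for 20% extra headroom.
    """
    if used_tb < 0 or burst_fraction < 0:
        raise ValueError("used_tb and burst_fraction must be non-negative")
    headroom = 1.0 + burst_fraction
    return used_tb * 2.5 * headroom, used_tb * 3.0 * headroom


if __name__ == "__main__":
    # Hypothetical platform: 10 TB used, 20% allowance for bursts.
    low, high = ingest_capacity_range_tb(used_tb=10, burst_fraction=0.2)
    print(f"Plan for roughly {low:.1f} to {high:.1f} TB")
```

How much burst allowance is "comfortable" depends entirely on your own platform, so treat the 20% figure as a placeholder.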

Don’t forget, Veeam, like every other backup system on the planet, does not know what causes the huge amounts of changed data reported by CBT, which is short for Changed Block Tracking. This is the mechanism backup software uses to detect changes to a physical or virtual server hard drive at the block level.

Additionally, CBT does not explain whether new VMs are a new SQL cluster, an Exchange DAG node or upgrade, a malware infection, etc. You really need to scope in some breathing room for unexpected bursts in changed data. Furthermore, it is now impossible to ignore not only the performance of ReFS but also the predictable trend line it provides.

Either way it is a good idea to try and scope enough flash storage in the caching layer, to allow for a burst of speed when ingesting data and performing transforms. If you can handle your daily change rate plus a comfortable overhead, you should be in a good position.
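One way to put a number on "daily change rate plus a comfortable overhead" is sketched below. Sizing against the peak observed day, the 50% default overhead and the sample figures are all assumptions for illustration, not recommendations derived from the test results.

```python
def cache_tier_size_gb(daily_changes_gb, overhead_fraction=0.5):
    """Suggest a flash cache size in GB from observed daily change figures.

    Sizes for the largest observed daily change (an assumption; you may
    prefer an average or a percentile) plus a fractional overhead.
    """
    if not daily_changes_gb:
        raise ValueError("at least one daily change figure is required")
    if overhead_fraction < 0:
        raise ValueError("overhead_fraction must be non-negative")
    peak_gb = max(daily_changes_gb)
    return peak_gb * (1.0 + overhead_fraction)


if __name__ == "__main__":
    # Hypothetical week of daily changed data, in GB.
    week = [120, 95, 200, 110, 130, 60, 40]
    print(f"Suggested cache tier: {cache_tier_size_gb(week):.0f} GB")
```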

At what point, commercially, does it become more effective to simply add more space and enjoy faster backup copies and restores using ReFS vs the additional capacity and hardware support requirements of NTFS?

Great question, and to quote every single person who has provided me with IT training over the years, “it depends”. If you remember back to Part 1, I referred to the organic nature of NTFS and ReFS repository configurations. It is impossible to calculate this on a general basis; you must take everything in a platform into consideration to give an accurate result. That being said, you also need to weigh the speed of ReFS against the capacity benefits of NTFS.

I know some people prefer line graphs so here is one comparing all 64K repositories:

From this we immediately learn that, in our scenario, ReFS is better hands down for up to 3 weeks of retention: performance gains aside, there is little difference with regards to processed capacity. At 5 weeks ReFS is holding its own on capacity vs NTFS, but after this point the benefits of deduplication really start to kick in.

Once more, we can confirm the predictable nature of ReFS vs the varying logarithmic curves of NTFS with deduplication. In essence, so far it boils down to performance vs retention.

What about drive wear and tear & the churn rate, how will that affect any decision?

If using NTFS with dedupe-friendly or uncompressed settings, significantly more data must be read and written than with ReFS or NTFS using optimal compression. That will indeed translate into additional drive wear and tear: more IOPS and more data reads and writes, which equates to more head movements, etc. If we look at the amount of changed data over time for 64K repositories, we observe the following:

This is a massively underestimated graph and one that is often ignored. Supportability is a keystone in infrastructure design, yet it is often overlooked outside of cross-patching network uplinks, SAN controllers and host failover capacity. What options do we have for disk arrays?

- RAID 5

- RAID 6

- RAID 2.0+ (Huawei Storage rebuild times are insane vs RAID 5/6: hours and minutes vs days and hours)

- JBOD

- Storage Spaces is great if you are Microsoft; however, from a supportability standpoint, its handling of failed disks in the real world frankly leaves a lot to be desired. If you have ever experienced a failed caching drive, you will know what I am referring to.

So that leaves RAID 6 & Huawei. Using RAID 5 with the IOPS and drive capacities required for backup jobs is practically insane. Thankfully, Microsoft have declared that ReFS is not supported on any hardware virtualised RAID system. How is that deduplicating appliance looking now?

Wait, what?

Microsoft do not support RAID 5, 6 or any other type of RAID for ReFS. The official support* for ReFS means you use Storage Spaces, Storage Spaces Direct or JBOD.

Excerpt from above:

Does that mean every instance of ReFS worldwide using hardware virtualised RAID is technically unsupported by Microsoft?

Technically, yes. That being said, and as previously mentioned, Microsoft have been working very closely with Veeam to resolve issues in ReFS with regards to Blockclone. Veeam have at best “spearheaded” and at worst been “early pioneers” of Blockclone technology. When you tie that to their close working relationship, both parties need ReFS to be stable and surely have common motives for wanting an increased adoption of ReFS.

The obvious Hyper-V benefits are a clear indicator here as to their motivation, so this has in many ways been the saving grace for backups. However, you must bear in mind that technically it is not a supported implementation if you are using hardware virtualised RAID.

At some point this will come into play as their get-out-of-jail card.

So, what are my options?

Thus far, Microsoft seem to be working to resolve issues that, by proxy, contribute towards supporting ReFS functionality on RAID volumes; however, at some point this could change.

For now, if you have ReFS in play, it can work and will continue to receive the collaborative efforts of Microsoft and Veeam. If you are looking at a new solution, it is impossible to ignore the fact that you must use an alternative to hardware virtualised RAID if you want to use ReFS in a future deployment.

ReFS works in these circumstances and there is a plethora of reports to that effect. I have yet to see a failure in an ReFS restore; that being said, if you follow the Veeam forums, there are some who have seen otherwise. Without platform access it is impossible to tell if there were other factors involved.

As it stands, ReFS for the most part is working well now and is probably your best bet for a primary or indeed secondary backup repository, taking all these considerations into account. As previously discussed, for me, this means a 64K block size, ReFS-formatted, reverse incremental backup target will be your best all-round primary backup storage. With regards to a second copy, ReFS is great for fast transforms; however, you may be happy trading performance for retention, in which case backup copies can target an NTFS volume.

Plan ahead folks and look at your data before you commit to a solution!

If you have any comments or thoughts on this series, my opinions or anything else, please let me know via comments below or if you prefer, via Twitter or LinkedIn. I was genuinely interested in these results myself and I hope some of you were as well.

If you would like to view all the Power BI Graphs in one place you can do so here:

Additionally they can be viewed online in a browser here:

https://app.powerbi.com/view?r=eyJrIjoiZTZiNDY0MTgtMjFhNC00MjNlLWFlYzQtYmM0NTYwMmRmM2VjIiwidCI6IjhhNDc2ZjRiLTdjMzgtNDE5Mi05OTFkLWUyZjYxMWNkZDllNiIsImMiOjh9&pageName=ReportSection1

*** Update 20/02/2019 – Microsoft ReFS Support on Hardware RAID ***

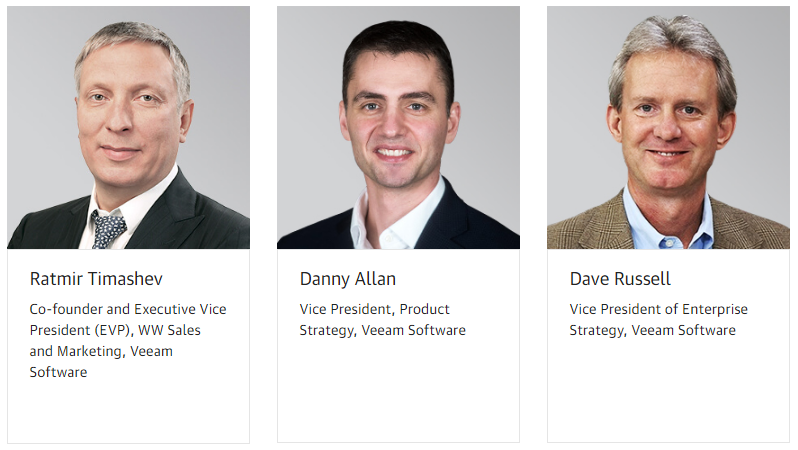

Following some sterling work by Anton Gostev, Andrew Hansen & their respective teams, Microsoft changed their support stance on ReFS running on Hardware RAID.

Excerpt from Gostev’s Weekly Digest:

“Huge news for all ReFS users! Together with many of you, we’ve spent countless hours discussing that strange ReFS support policy update from last year, which essentially limited ReFS to Storage Spaces and standalone disks only. So no RAID controllers, no FC or iSCSI LUNs, no nothing – just plain vanilla disks, period. As you know, I’ve been keeping in touch with Microsoft ReFS team on this issue all the time, translating the official WHYs they were giving me and being devil’s advocate, so to speak (true MVP eh). Secretly though, I was not giving up and kept the firm push on them – just because this limitation did not make any sense to me. Still, I can never take all the credit because I know I’d still be banging my head against the wall today if one awesome guy – Andrew Hansen – did not join that Microsoft team as the new PM. He took the issue very seriously and worked diligently to get to the bottom of this, eventually clearing up what in the end appeared to be one big internal confusion that started from a single bad documentation edit.

Bottom line: ReFS is in fact fully supported on ANY storage hardware that is listed on Microsoft HCL. This includes general purpose servers with certified RAID controllers, such as Cisco S3260 (see statement under Basic Disks), as well as FC and iSCSI LUNs on SAN such as HPE Nimble (under Backup Target). What about those flush concerns we’ve talked about so much? These concerns are in fact 100% valid, but guess what – apparently, Microsoft storage certification process has always included the dedicated flush test tool designed to ensure this command is respected by the RAID controller, with data being protected in all scenarios – including from power loss during write – using technologies like battery-backed write cache (for example, S3260 uses supercapacitor for this purpose). Anyway – I’m super excited to see this resolved, as this was obviously a huge roadblock to ReFS proliferation.”

-Craig Rodgers